
Email: bduister@cmu.edu

Bio

I am a Ph.D. student in the Robotics Institute at Carnegie Mellon University (CMU), advised by Jeffrey Ichnowski, and I frequently collaborate with Deva Ramanan and Bowen Wen. I was previously a research intern in the DUSt3R Group at NAVER Labs Europe, advised by Jérome Revaud and Vincent Leroy. My interests lie at the intersection of perception and robot manipulation of challenging objects, such as (transparent) deformables. I am a recipient of the 2023 CMLH Fellowship in Digital Health Innovation and the 2024 CMLH Fellowship in Generative AI in Healthcare.

During my first year at CMU, I worked with Sebastian Scherer on geometric camera calibration. Before that, I completed a Bachelor's and a Master's degree in Aerospace Engineering at Delft University of Technology in the Netherlands, where, advised by Guido de Croon, I studied efficient bio-inspired algorithms for fully autonomous nano drones. In 2019, I was a visiting student in Vijay Janapa Reddi's Edge Computing Lab at Harvard University, where we studied deep reinforcement learning for tiny robots.

I am passionate about creating a future where complex robotic automation is scalable, safe, and beneficial.

Students

I am always looking for collaborators; shoot me an email if you would like to work with me!

Google Scholar / Twitter / Bluesky / LinkedIn / GitHub


Bardienus P. Duisterhof, Jan Oberst, Bowen Wen, Stan Birchfield, Deva Ramanan, Jeffrey Ichnowski
NeurIPS 2025
project website / code / X thread

Imagine if robots could fill in the blanks in cluttered scenes. Enter RaySt3R✨: a single masked RGB-D image in, complete 3D out. It infers depth, object masks, and confidence for novel views, and merges the predictions into a single point cloud.


Bardienus P. Duisterhof*, Lojze Zust*, Philippe Weinzaepfel, Vincent Leroy, Yohann Cabon, Jérome Revaud
International Conference on 3D Vision (3DV) 2025, Oral, Best Student Paper Award
arXiv / code

MASt3R for SfM with 1000+ unordered images! We contribute a memory-efficient algorithm that leverages the MASt3R encoder for image retrieval without any additional overhead. MASt3R-SfM has an overall linear complexity in the number of images and can handle any set of ordered or unordered images.


Bardienus P. Duisterhof, Zhao Mandi, Yunchao Yao, Jia-Wei Liu, Jenny Seidenschwarz, Mike Zheng Shou, Deva Ramanan, Shuran Song, Stan Birchfield, Bowen Wen, Jeffrey Ichnowski
Proc. Algorithmic Foundations of Robotics (WAFR) 2024
project website / arXiv / data / code / X thread

Deformable objects are common in household, industrial, and healthcare settings. Tracking them would unlock many applications in robotics, gen-AI, and AR. How? Check out DeformGS: a method for dense 3D tracking and dynamic novel view synthesis on deformable cloths in the real world.


Alberta Longhini*, Marcel Büsching*, Bardienus P. Duisterhof, Jens Lundell, Jeffrey Ichnowski, Mårten Björkman, Danica Kragic
Conference on Robot Learning (CoRL) 2024
project website / paper

We present Cloth-Splatting: a method for accurate state estimation of deformable objects from RGB supervision. Cloth-Splatting leverages a GNN as a prior to improve tracking accuracy and speed up convergence.


Jenny Seidenschwarz, Qunjie Zhou, Bardienus P. Duisterhof, Deva Ramanan, Laura Leal-Taixé
International Conference on 3D Vision (3DV) 2025
arXiv / project website

Online 3D tracking can unlock many new applications in robotics, AR, and VR. Most prior work has focused on offline tracking, which requires an entire sequence of posed images. Here we present DynOMo, a method for simultaneous online 3D tracking, 3D reconstruction, novel view synthesis, and pose estimation!


Bardienus P. Duisterhof, Yuemin Mao, Si Heng Teng, Jeffrey Ichnowski
IEEE International Conference on Robotics and Automation (ICRA) 2024
🌟Spotlight🌟 presentation at the ICCV 2023 TRICKY Workshop
project website / arXiv / video / code

In this work, we propose Residual-NeRF, a method to improve depth perception and training speed for transparent objects. Robots often operate repeatedly in the same area, such as a kitchen. By first learning a background NeRF of the scene without the transparent objects to be manipulated, we improve depth perception quality and speed up training.


Hajo H. Erwich, Bardienus P. Duisterhof, Guido de Croon
IEEE International Conference on Robotics and Automation (ICRA) 2024
project website / paper / video / code

Gas-source localization is an important task for autonomous robots. We present GSL-Bench, the first standardized benchmark for gas-source localization. GSL-Bench uses NVIDIA Isaac Sim for high visual fidelity and OpenFOAM for realistic gas simulations.


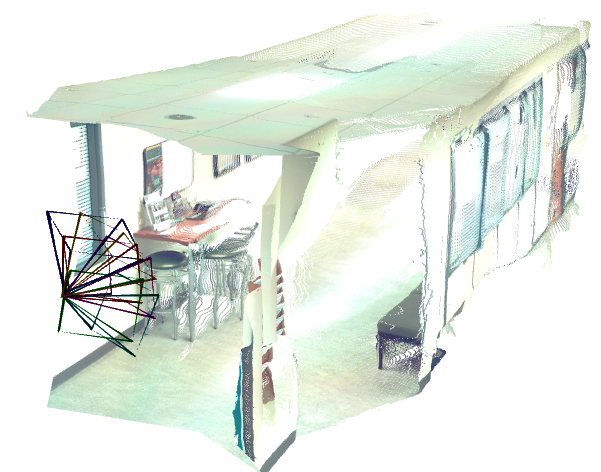

Bardienus P. Duisterhof, Yaoyu Hu, Si Heng Teng, Michael Kaess, Sebastian Scherer
project website / arXiv / video / code

In this work, we present a methodology for accurate wide-angle camera calibration. Our pipeline generates an intermediate camera model and uses it to iteratively improve feature detection and, ultimately, the estimated camera parameters.


Bardienus P. Duisterhof, Shushuai Li, Javier Burgués, Vijay Janapa Reddi, Guido C.H.E. de Croon
IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2021
arXiv / video / code

We have developed a swarm of tiny autonomous drones that can localize gas sources in unknown, cluttered environments. Bio-inspired AI allows the drones to tackle this complex task without any external infrastructure.


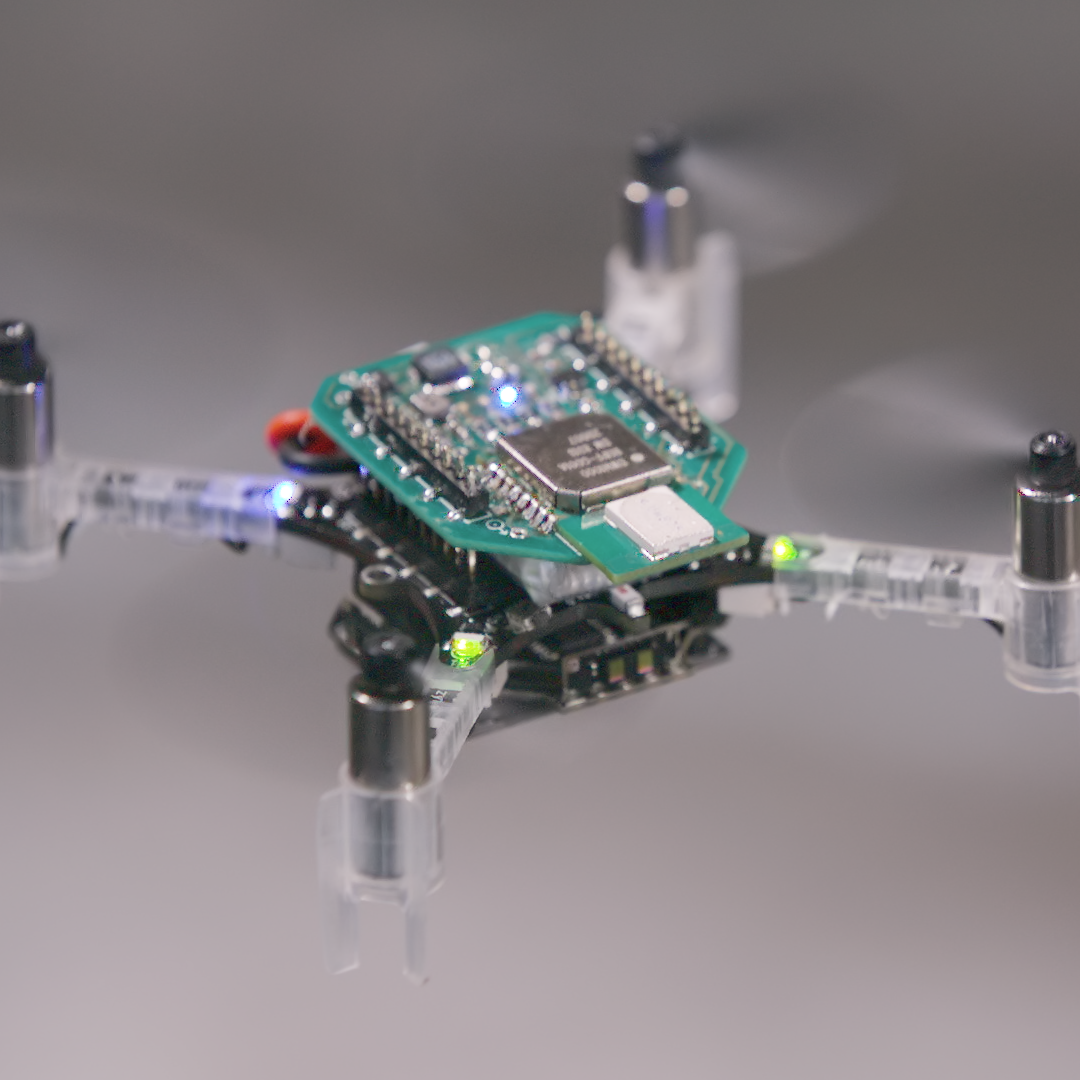

Bardienus P. Duisterhof, Srivatsan Krishnan, Jonathan J. Cruz, Colby R. Banbury, William Fu, Aleksandra Faust, Guido C.H.E. de Croon, Vijay Janapa Reddi
IEEE International Conference on Robotics and Automation (ICRA) 2021
paper / video / code

We present fully autonomous source seeking onboard a highly constrained nano quadcopter. We contribute an application-specific system design and observation-feature design that together enable onboard inference of a deep-RL policy.


Diana A. Olejnik, Bardienus P. Duisterhof, Matej Karásek, Kirk Y. W. Scheper, Tom van Dijk, Guido C.H.E. de Croon
Unmanned Systems, Vol. 08, No. 04, pp. 287-294, 2020
paper / video

This paper describes the computer vision and control algorithms used to achieve autonomous flight with a ~30 g tailless flapping-wing robot, which competed in the indoor micro air vehicle competition of the International Micro Air Vehicle Conference and Competition (IMAV 2018).


16-820 at CMU: Advanced Computer Vision

16-720 at CMU: Introduction to Computer Vision

AE2235-I: Aerospace Systems & Control Theory


Forbes / IEEE Spectrum Video Friday / Robohub / Bitcraze Blog / PiXL Drone Show


Modified version of template from here, and vibe-coded with Cursor.